Exploring the Origins and Principles of Systems Biology


Chapter 1: The Emergence of Systems Biology

Systems biology examines biological processes, characteristics, pathways, and structures as interacting parts of a whole rather than in isolation. Mihajlo Mesarović, a Serbian scientist and professor of systems engineering and mathematics, is credited with coining the term "systems biology" in 1968. His work aimed to apply systems theory to gain insight into biological entities.

Ludwig von Bertalanffy, the Austrian biologist who introduced the theory of open systems to explain the phenomena of life, is often regarded as a pioneer in merging systems theory with biology. Bertalanffy argued that classical thermodynamics, formulated for closed systems, does not necessarily hold for "open systems" such as living organisms, which continuously exchange matter and energy with their surroundings. In his framework, open systems are characterized by an intake of negative entropy, a property that accounts for growth, differentiation, and increasing complexity in living beings.

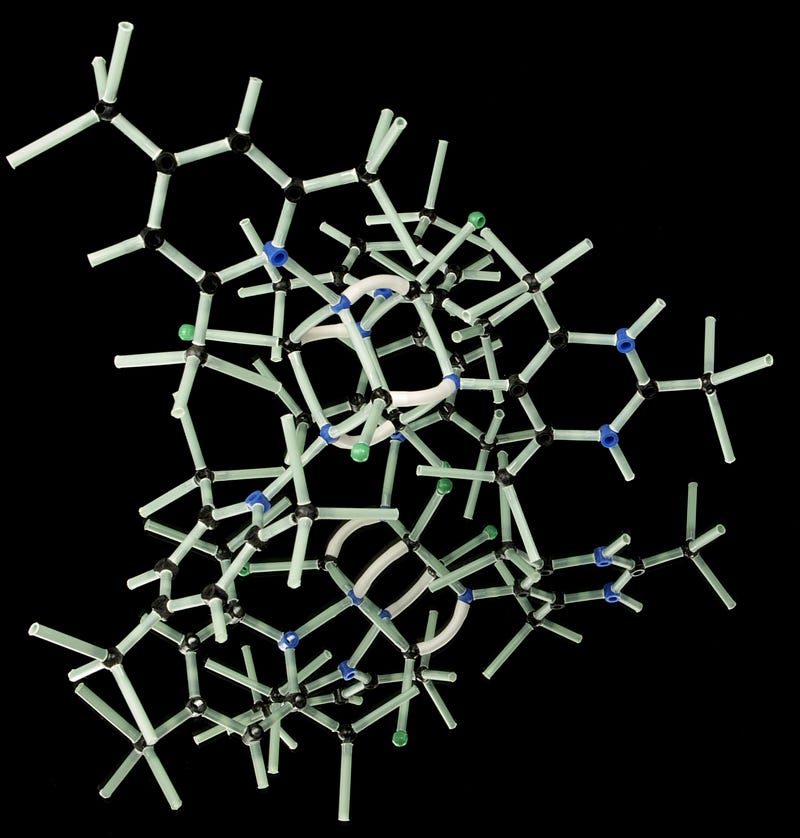

Photo by Photoholgic on Unsplash

The notion of entropy, together with the second law of thermodynamics, calls for careful reinterpretation in light of statistical mechanics. Even the word "entropy" raises questions once we trace its etymological roots.

To trace the origins of the term "entropy," we can turn to Wikipedia. In 1865, Rudolf Clausius introduced the term to describe "the differential of a quantity dependent on the configuration of the system," deriving it from the Greek word for "transformation." He also called it "transformational content" (Verwandlungsinhalt), by analogy with his "thermal and ergonal content" (Wärme- und Werkinhalt), but ultimately preferred "entropy" because it closely resembles the word "energy," and he considered the two quantities nearly "analogous in their physical significance." In effect, he replaced the root for work (ergon) in "energy" with the root for transformation (tropē).

Clausius had observed that non-usable energy increases as steam passes from the inlet to the exhaust of a steam engine. Combining the prefix "en-" (as in "energy") with the Greek word for turning or change (tropē), which he rendered in German as Verwandlung and which is usually translated into English as "transformation," he coined "entropy" in 1865. The word entered the English lexicon in 1868.
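For reference, Clausius's thermodynamic definition relates an infinitesimal change in entropy to the heat exchanged reversibly at absolute temperature T. The second line is an illustrative case of our own, not Clausius's steam-engine analysis: heat Q passing from a hot reservoir at T_h to a cold one at T_c. It shows why total entropy rises as energy degrades into a less usable form:

$$
dS = \frac{\delta Q_{\text{rev}}}{T}
$$

$$
\Delta S_{\text{total}} = -\frac{Q}{T_h} + \frac{Q}{T_c} = Q\left(\frac{1}{T_c} - \frac{1}{T_h}\right) > 0 \quad \text{for } T_h > T_c,\ Q > 0
$$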

Clausius thus viewed entropy as a measure of transformation within a system. The Austrian physicist Ludwig Boltzmann, together with Josiah Willard Gibbs and Max Planck, later placed entropy on a statistical footing, a major advance. Boltzmann's equation relates the entropy of an ideal gas to its multiplicity, the number of microstates corresponding to the gas's macrostate.

Boltzmann Equation for Entropy
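In the form usually written today, the equation reads as below, where S is entropy, W is the multiplicity (the number of microstates compatible with the macrostate), and k_B = 1.380649 × 10⁻²³ J/K is the Boltzmann constant:

$$
S = k_B \ln W
$$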

Formulated by Ludwig Boltzmann between 1872 and 1875, the equation was later put into its current form by Max Planck around 1900. Its main contribution is to link entropy to the probabilistic nature of physical phenomena, and several further observations follow from it. The link is expressed through the logarithm of the multiplicity. The logarithm compresses astronomically large counts of microstates into manageable numbers, and it makes entropy additive: the multiplicities of independent subsystems multiply, while their entropies add. In this sense, logarithms let us handle the breadth and depth of such quantities more effectively.
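A quick numerical check shows one reason the logarithm is natural here: for two independent subsystems the multiplicities multiply, so their Boltzmann entropies add. This is a minimal sketch. The multiplicities are illustrative numbers, not physical data, and the helper name `boltzmann_entropy` is hypothetical. k_B uses its exact SI value.

```python
import math

# Boltzmann constant in J/K (exact value in the 2019 SI redefinition)
K_B = 1.380649e-23


def boltzmann_entropy(multiplicity: int) -> float:
    """Hypothetical helper: return S = k_B * ln(W) for a macrostate with W microstates."""
    if multiplicity < 1:
        raise ValueError("multiplicity must be a positive integer")
    # math.log accepts arbitrarily large Python ints, so huge W values are fine
    return K_B * math.log(multiplicity)


# Illustrative multiplicities for two independent subsystems (assumed values)
w1, w2 = 10**20, 10**30

# Independent subsystems: combined multiplicity is the product W1 * W2
s_combined = boltzmann_entropy(w1 * w2)
s_sum = boltzmann_entropy(w1) + boltzmann_entropy(w2)

print(f"S(W1*W2)    = {s_combined:.6e} J/K")
print(f"S(W1)+S(W2) = {s_sum:.6e} J/K")
print(math.isclose(s_combined, s_sum))
```

Because the logarithm turns the product of multiplicities into a sum, the two printed entropies agree up to floating-point rounding, even though W itself spans fifty orders of magnitude.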

Consequently, entropy can be viewed through a statistical lens, as a measure of the probabilistic balance between order and disorder in a system. Because the logarithmic model counts the microstates compatible with a given macrostate, it emphasizes randomness and disorder rather than order. Represented through other, more structured probability models, it might instead express a more ordered perspective.
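To make the order/disorder reading concrete, the sketch below swaps in the Gibbs form of entropy, S = -k_B Σ p_i ln p_i, which generalizes Boltzmann's formula to microstates with unequal probabilities. The three distributions are made up for illustration, the helper name `gibbs_entropy` is hypothetical, and entropy is reported in units of k_B.

```python
import math


def gibbs_entropy(probs: list[float]) -> float:
    """Hypothetical helper: return S / k_B = sum(p * ln(1/p)) over microstate probabilities."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # States with p == 0 contribute nothing (p * ln p -> 0 as p -> 0)
    return sum(p * math.log(1.0 / p) for p in probs if p > 0)


n = 4  # illustrative number of accessible microstates
ordered = [1.0, 0.0, 0.0, 0.0]  # system certainly in one microstate
skewed = [0.7, 0.1, 0.1, 0.1]   # partially ordered
uniform = [1.0 / n] * n         # maximally disordered

for name, dist in [("ordered", ordered), ("skewed", skewed), ("uniform", uniform)]:
    print(f"{name:8s} S/k_B = {gibbs_entropy(dist):.4f}")

# With equal probabilities the Gibbs form reduces to Boltzmann's ln W
print(math.isclose(gibbs_entropy(uniform), math.log(n)))
```

A distribution concentrated on a single microstate gives zero entropy, while the uniform distribution gives the maximum, ln W, recovering Boltzmann's equation as a special case.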

The concept of entropy thus remains fertile ground for ongoing exploration and interpretation. Integrating it into systems theory and systems biology prompts a reevaluation of fundamental principles. What truly underpins communication among biological processes, properties, pathways, and structures? Is it inherent order, external disorder, a blend of both, or the continuous differentiation among systems that governs these communication mechanisms? The inquiry begins here!