# The Role of Generalization in ChatGPT and Visual AI Technologies


Chapter 1: Understanding Generalization in AI

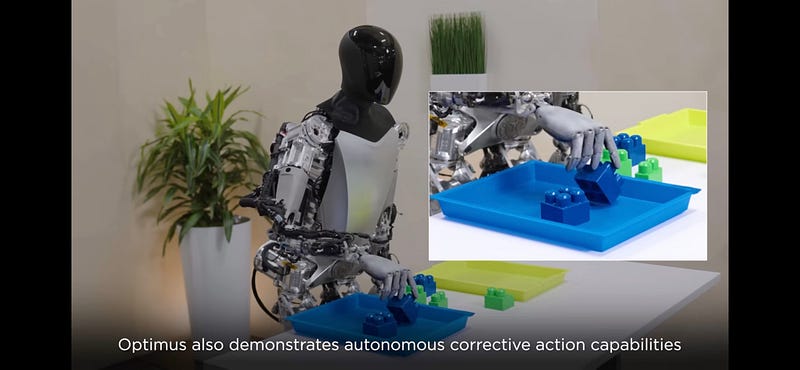

Generalization plays a pivotal role in both ChatGPT and visual AI systems. Tesla's latest Optimus bot and its Full Self-Driving (FSD) technology both showcase the ability to learn in a 'task-agnostic' manner. This means that the systems don't rely on rigid coding or precisely choreographed movements; instead, they can learn by observing relevant videos.

This is significant because generalization allows AI to function without the necessity of being trained on every conceivable scenario or object. This concept is fundamentally enabled by deep learning technologies.

How Does It Work?

The Optimus bot does not need to see every conceivable arrangement of blocks to understand how to sort or build. It only needs enough training to grasp the fundamental concepts, which lets it make adjustments as it goes. With adequate experience, the bot will be capable of sorting, constructing, and using various tools, adapting to nearly any components it encounters. It will keep working from what it has learned until it receives its next round of fine-tuning. In time, these systems may learn autonomously, much as humans do, although expert guidance, like a human education, remains invaluable.

Neural Net Generalization

Generalization is a critical factor in the achievements of contemporary AI systems. The Optimus bot and FSD version 12 operate on end-to-end neural networks, meaning they learn from experience and apply that knowledge in real time. For instance, when prompted to sort or unsort blocks, the bot draws on its training to respond accordingly. It is likely equipped with an integrated Large Language Model (LLM) akin to GPT, allowing for bidirectional communication. It currently accepts prompts, but the specifics of its training remain a matter of speculation; they may involve simulations based on human motion capture.

The bot's learning process mirrors that of LLMs and humans. It doesn't need to have encountered every possible scenario to perform its tasks; instead, it relies on the core idea it has internalized. The training phase is just the beginning; the real challenge lies in how the bot applies its learning as it continues to evolve.

Chapter 2: The Mechanisms of Generalization

Generalization rests on two things: tasks are hierarchical, and each predicted action depends probabilistically on the current state and on past experience. This principle applies to FSD, androids, and LLMs alike.
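These two ingredients can be made concrete with a toy sketch. Nothing here reflects how Optimus or FSD is actually built: the sub-goal name, the action names, and the `action_scores` function are hypothetical stand-ins for what a trained network would output. The point is only that the same sub-goal yields a different probability distribution over actions as the state changes.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())
    exps = {action: math.exp(s - peak) for action, s in scores.items()}
    total = sum(exps.values())
    return {action: e / total for action, e in exps.items()}

def action_scores(subgoal, distance_to_block):
    """Hypothetical stand-in for a network: score actions from sub-goal and state."""
    if subgoal == "pick a block":
        # Far from the block, reaching scores higher; close to it, grasping does.
        return {"reach": distance_to_block,
                "grasp": 1.0 - distance_to_block,
                "wait": -1.0}
    return {"carry": 1.0, "release": 0.0, "wait": -1.0}

def sample_action(probs, rng):
    """Draw one action according to its probability."""
    actions = list(probs)
    weights = [probs[a] for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
far = softmax(action_scores("pick a block", distance_to_block=2.0))
near = softmax(action_scores("pick a block", distance_to_block=0.0))

print("far from block: ", far)
print("next to block:  ", near)
print("sampled action: ", sample_action(far, rng))
```

The hierarchy shows up in the `subgoal` argument, and the probabilistic dependence shows up in the fact that actions are sampled rather than looked up.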

LLMs, like GPT, learn to parse sentences within their neural structures, a process likely analogous to human cognition.

Over time, LLMs become adept at understanding the implications of connecting words like 'because,' 'instead,' and 'or.' The exact order of the words becomes less significant, which suggests that LLMs do not merely repeat information but understand how language is constructed and what it implies in novel contexts.

Back to Visual and Motor Functions

In visual AI, such as FSD or the Tesla android, it matters less whether objects appear exactly where they did in the training data. Neural networks excel at breaking problems down into fundamental components learned from prior experience.

To illustrate, when tasked with sorting blocks, the bot first determines which block to approach and initiates movement toward it. It does not require prior direct experience with that specific arrangement; instead, it effectively interpolates between similar scenarios learned during training. This interpolation reflects a 'compression' of its training data, allowing it to understand the underlying principles rather than memorize every detail.
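The link between compression and interpolation can be shown with a deliberately tiny example. The data below is made up for illustration (a block position mapped to a reach distance), and a straight-line fit is used in place of a neural network. Five training pairs are compressed into two numbers, and those two numbers are enough to answer for a position that was never in the training set.

```python
# Hypothetical toy data: block position (cm) -> reach distance to command (cm).
# The position 3.0 is deliberately left out of training.
train_x = [0.0, 1.0, 2.0, 4.0, 5.0]
train_y = [1.0, 3.0, 5.0, 9.0, 11.0]

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# The whole training set is "compressed" into these two parameters.
slope, intercept = fit_line(train_x, train_y)

def predict(x):
    """Apply the learned rule to any position, seen or unseen."""
    return slope * x + intercept

unseen_x = 3.0
print(f"learned rule: reach = {slope:.2f} * position + {intercept:.2f}")
print(f"prediction for unseen position {unseen_x}: {predict(unseen_x):.2f}")
```

A real network has far more parameters and learns far richer rules, but the principle is the same: it stores the regularity, not the examples.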

The bot's predictions about its next actions occur numerous times per second as it moves, continually refining its approach based on immediate feedback. Just like humans, who don't calculate the precise trajectory of a moving object, the bot adjusts its actions based on probabilistic assessments of its environment.
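A rough sketch of that feedback loop follows. It uses a hand-written proportional correction rather than a learned policy, and the `gain`, `target_speed`, and starting positions are arbitrary assumptions. What it illustrates is the structure: the hand never solves for the target's trajectory, it just re-decides a small move at every tick from the latest observation.

```python
def track_target(steps=50, gain=0.3, target_speed=0.1):
    """Chase a moving target by correcting a little at every tick."""
    hand = 0.0
    target = 5.0
    errors = []
    for _ in range(steps):
        target += target_speed      # the world changes between ticks
        error = target - hand       # immediate feedback: where is the target now?
        hand += gain * error        # small corrective move, nothing planned in advance
        errors.append(abs(target - hand))
    return errors

errors = track_target()
print(f"gap after first tick: {errors[0]:.2f}")
print(f"gap after last tick:  {errors[-1]:.2f}")
```

The gap shrinks quickly and then settles at a small lag behind the moving target, without the controller ever being told how fast the target moves.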

Big Picture Planning

The concern that the bot cannot form overarching plans because it focuses only on the immediate task is unfounded. Like sophisticated LLMs such as GPT, the neural network considers the broader context. For example, when GPT begins drafting a summary, it already has a structured approach in mind, such as how many strategies it will present given the input context.

Similarly, the android bot will initiate actions based on an overarching plan, which could involve assembling components of a laptop. As it progresses, it becomes increasingly committed to its chosen task and the numerous sub-goals involved. This process remains probabilistic, allowing the bot to adapt even when encountering novel scenarios.
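One way to picture an overarching plan with sub-goals is a small tracker that remembers what has been completed and what comes next. This is a speculative illustration only: the `GoalTracker` class, the sub-goal names, and the list of outcomes are all invented here, and a real system would hold this state inside the network rather than in an explicit list.

```python
from dataclasses import dataclass, field

@dataclass
class GoalTracker:
    goal: str
    subgoals: list
    done: list = field(default_factory=list)

    def current(self):
        """Return the next unfinished sub-goal, or None when the plan is complete."""
        if len(self.done) < len(self.subgoals):
            return self.subgoals[len(self.done)]
        return None

    def report(self, succeeded):
        """Advance on success; on failure stay on the same sub-goal and retry."""
        if succeeded and self.current() is not None:
            self.done.append(self.current())

    def finished(self):
        return len(self.done) == len(self.subgoals)

# Hypothetical plan and outcomes: the keyboard step fails once before succeeding.
tracker = GoalTracker("assemble laptop",
                      ["seat battery", "attach keyboard", "close case"])
attempts = []
for succeeded in [True, False, True, True]:
    attempts.append(tracker.current())
    tracker.report(succeeded)

print("attempted in order:", attempts)
print("plan finished:", tracker.finished())
```

The failed step is simply attempted again, so the overall goal survives a local setback, which is the kind of adaptation the paragraph above describes.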

In conclusion, a bot equipped with memory and goal-tracking capabilities will exhibit even greater effectiveness, akin to LLMs that excel in complex tasks using 'Chain-of-Thought' methodologies. The future looks promising, and I anticipate seeing significant advancements in the Optimus bot within the next few months.