The European Union's Push for Comprehensive AI Regulation

Chapter 1: Introduction to AI Regulations in the EU

The European Union has begun building a comprehensive framework to regulate artificial intelligence (AI) across sectors. On April 21, 2021, the European Commission proposed first-of-their-kind rules governing how businesses and governments may use AI, a technology widely regarded as one of the most significant, and most ethically complex, scientific advances of recent years.

The draft regulations, unveiled at a press conference in Brussels, would restrict the use of AI in critical areas such as autonomous vehicles, employment decisions, educational admissions, and exam evaluations. They also cover the use of AI in law enforcement and judicial systems. The rules treat these areas as "high risk" because they could threaten public safety or fundamental rights.

Margrethe Vestager, the European Commission's executive vice president responsible for digital policy, emphasized, "The E.U. is leading the development of new global norms to ensure that A.I. can be trusted." Certain applications, particularly live facial recognition in public settings, will face outright bans, with limited exceptions for national security and similar uses.

This regulatory framework has significant implications for major tech companies such as Amazon, Google, Facebook, and Microsoft, as well as numerous other firms utilizing AI in sectors like healthcare, finance, and insurance. Governments already incorporate variations of this technology in law enforcement and the distribution of public services.

Chapter 2: The Proposed Legal Framework

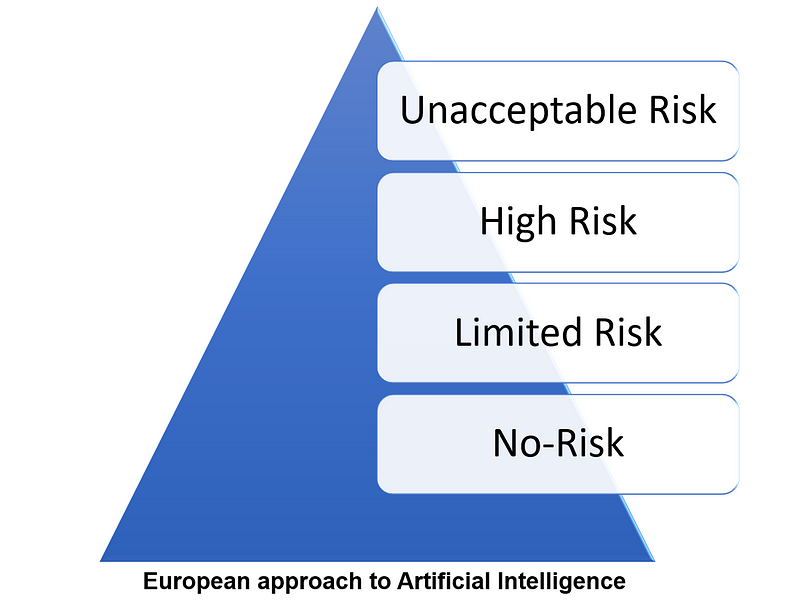

The proposed rules do not regulate AI technology as such; they regulate how it is used. The EU takes a risk-based approach in which the stringency of the obligations scales with the potential harm of a specific AI use.

- Unacceptable Risk: AI systems posing clear threats to safety, livelihoods, and individual rights will be prohibited. For instance, applications that manipulate human behavior or systems that assign a "social score" will not be permitted. Vestager remarked, “Uses like a toy employing voice assistance to endanger a child are unacceptable in Europe.”

- High Risk: Applications linked to critical infrastructure, vocational training, security components, employment, essential services (like credit assessments), law enforcement, and immigration management will face stringent obligations before market entry. “AI systems determining mortgage eligibility or those used in self-driving vehicles will undergo rigorous scrutiny due to their potential risks,” Vestager added.

- Limited Risk: Some systems will require specific transparency measures, such as chatbots. “Applications like a chatbot that assists with ticket bookings will be allowed, but must adhere to transparency standards,” Vestager noted.

- No Risk: Applications like video games or spam filters will face no additional restrictions beyond existing consumer protection laws. Vestager stated, “Our framework permits the free use of such applications without further regulations.”
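The four tiers above amount to a lookup from an intended use to a regulatory treatment. The following is a minimal sketch of that idea, not anything defined by the proposal itself: the names `RiskTier`, `TREATMENT`, `EXAMPLE_USES`, and `treatment_for` are hypothetical, and the use-case labels are simply the examples quoted in this article.

```python
from enum import Enum


class RiskTier(Enum):
    """The four tiers described in the article (names are illustrative)."""
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    NONE = "no risk"


# Treatment the article attaches to each tier.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "stringent obligations before market entry",
    RiskTier.LIMITED: "specific transparency measures",
    RiskTier.NONE: "no additional restrictions",
}

# Hypothetical labels for the example uses mentioned in the article.
EXAMPLE_USES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "behavior manipulation": RiskTier.UNACCEPTABLE,
    "credit assessment": RiskTier.HIGH,
    "self-driving vehicle": RiskTier.HIGH,
    "ticket-booking chatbot": RiskTier.LIMITED,
    "video game": RiskTier.NONE,
    "spam filter": RiskTier.NONE,
}


def treatment_for(use_case: str) -> str:
    """Return the tier and treatment for one of the known example uses."""
    tier = EXAMPLE_USES[use_case]
    return f"{tier.value}: {TREATMENT[tier]}"


print(treatment_for("ticket-booking chatbot"))
# prints "limited risk: specific transparency measures"
```

The point of the sketch is that the same underlying technology can land in different tiers depending on its intended use, which is why the table is keyed by use case rather than by model or algorithm.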

The first video, titled "The EU's AI Act Explained," provides a detailed overview of the proposed regulations and their implications for businesses and individuals.

Chapter 3: Implications of the New Regulations

The regulations are expected to take several years to debate and implement. Once they apply, companies that fail to comply could face fines of up to 6% of their global revenue. AI, which enables machines to learn on their own by processing vast datasets, is regarded by experts across many fields as one of the most transformative technologies.
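To give a sense of scale for the 6% ceiling mentioned above, here is a small arithmetic sketch. It assumes only what this article states (a cap expressed as a share of global revenue); the function name `max_fine` and the revenue figure are hypothetical, and any other terms of the penalty regime are out of scope.

```python
from decimal import Decimal

# Ceiling stated in the article: fines of up to 6% of global revenue.
MAX_FINE_RATE = Decimal("0.06")


def max_fine(global_revenue: Decimal) -> Decimal:
    """Upper bound on a fine under the 6% ceiling (illustrative only)."""
    if global_revenue < 0:
        raise ValueError("revenue must be non-negative")
    return global_revenue * MAX_FINE_RATE


# Hypothetical company with 10 billion in annual global revenue.
revenue = Decimal("10000000000")
print(f"Maximum fine: {max_fine(revenue):,.0f}")
```

For a company of that hypothetical size, the ceiling works out to 600 million in the same currency, which shows why the largest technology firms have the most at stake.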

As AI systems grow increasingly complex, understanding their decision-making processes may become more challenging, raising ethical concerns. Critics argue that AI could reinforce societal biases, infringe on privacy, and lead to job displacement. Vestager asserted, “In AI, trust is essential; it’s not just a luxury.” The EU is positioning itself as a leader in establishing global norms for trustworthy AI.

The second video, titled "The EU agrees on AI regulations: What will it mean for people and businesses in the EU?", discusses how these regulations may affect the public and businesses.

Conclusion: A Global Leader in AI Regulation

In summary, the draft rules are part of the European Union's effort to cement its role as a formidable regulator of the technology sector. The EU already enforces some of the world's strictest data privacy rules through the General Data Protection Regulation (GDPR), and it is weighing additional antitrust and content moderation legislation.

Businesses should prepare for compliance now, particularly for high-risk AI applications, which will require external audits and third-party evaluations to verify alignment with EU principles. As has happened with other regulatory efforts, these standards may prompt other nations to align their own Responsible AI regulations with those of the EU.

Vestager concluded, “While Europe may not have led the last wave of digital innovation, it has the potential to spearhead the next one, focusing on industrial data and advancing AI in sectors where Europe excels, such as manufacturing and healthcare.” Ensuring that AI technologies align with European values and principles is paramount as they evolve.

Additional Resources

For further reading on Responsible and Explainable AI, consider exploring the following articles:

- Can we explain AI? An Introduction to Explainable Artificial Intelligence.

- A quick Introduction to Responsible AI or rAI.

- Introduction to the 4 Principles of Responsible AI for Business Leaders.

- How to implement the Principles of Responsible AI in practice, according to Microsoft.

- Speech by Executive Vice-President Vestager on fostering a European approach to Artificial Intelligence.

- Proposal for a Regulation on a European approach for Artificial Intelligence.